A SOCless Detection Program at Netflix

I am excited to share that we are investing in greater detection capabilities as part of the SIRT mission. There are a number of existing detection efforts across Netflix security teams. This is an opportunity to enable those efforts while creating stronger alignment between detection and response. Of course, this being Netflix, our culture and our tech stack loom large in our consideration of how to expand our detection program. We want to avoid the traditional pitfalls and optimize for our overall security approach. The last thing we want is a bunch of lame alerts creating busy work for a large standing SOC.

Required reading for anyone interested in this area includes Ryan McGeehan’s Lessons Learned in Detection Engineering and the Alerting and Detection Strategy work from Dane Stuckey. I have borrowed liberally from their efforts. There are many ways to break this down, but I have settled on the following:

Within these categories, this is what a mature program looks like:

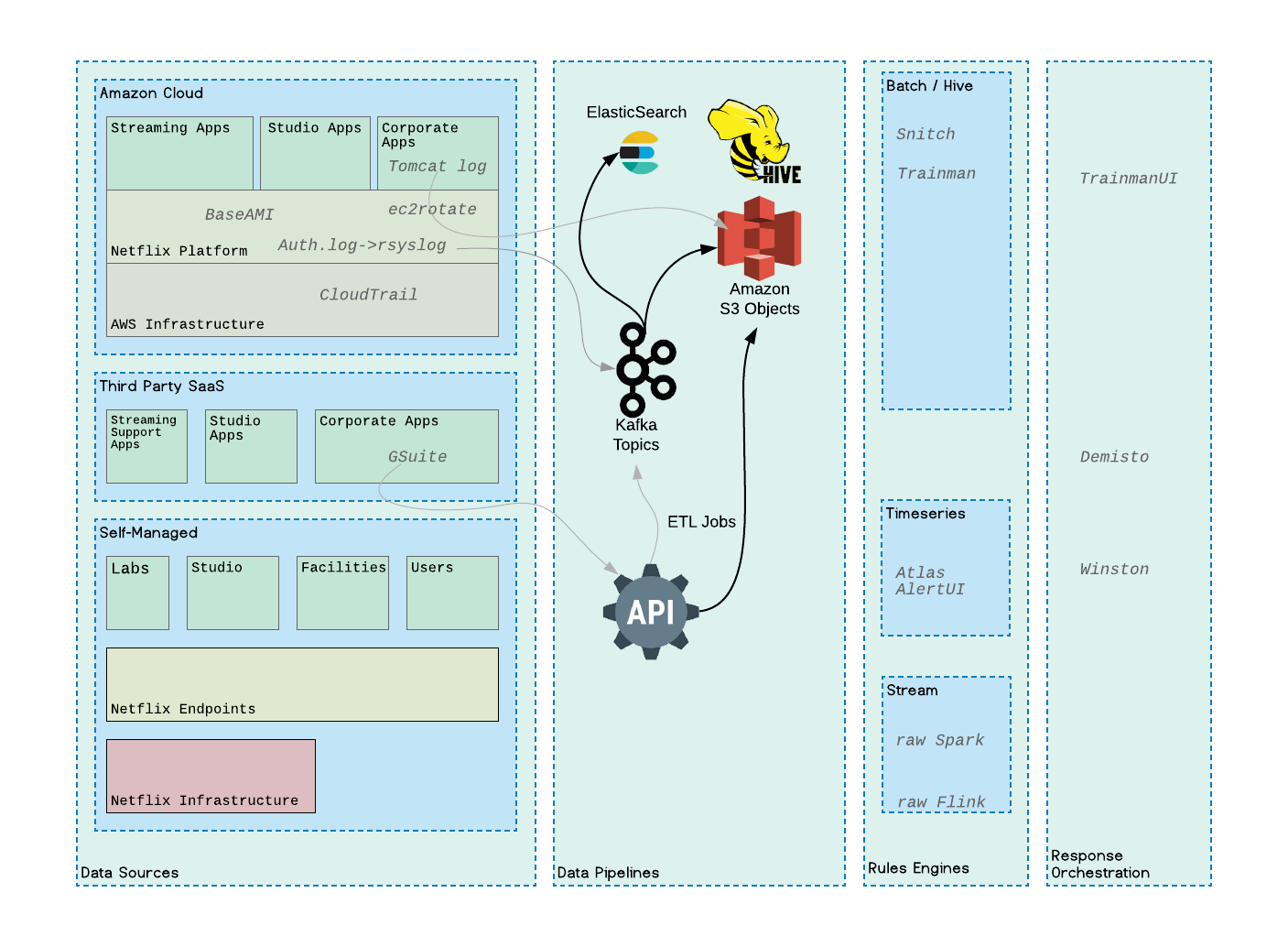

Data Sources

We need to discover existing security-relevant data as well as create new data. This includes data discovery, deployment of instrumentation like eBPF or osquery, and working with application teams on logging best practices. It also requires documentation of data sets, ideally in a partially automated fashion.

The creation and/or collection of data should be justified by a quantitative reduction in risk to the organization (in dollar terms), but that can be difficult to forecast accurately until you have a chance to explore the data - something a Hunt function is great for.

Data Pipelines (Plumbing)

Plumbing is critical. Not only does the pipeline need to get data from a wide array of sources to a smaller but still diverse set of sinks, it needs to do it efficiently and consider cost vs timeliness tradeoffs. It needs to scale up to meet demands of a growing organization and a growing set of sources. The onboarding process for new data needs to be easy, ideally automated but at least self-serve with partial automation. Onboarding needs to apply some level of schema. We need health checks for changes in data rate and format, along with runbooks on how to troubleshoot and repair flows when they go down.
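As a rough illustration of the health checks mentioned above, here is a minimal Python sketch. The function names, the 50% tolerance, and the field names are all assumptions made for the example; they do not describe an existing pipeline.

```python
from statistics import median


def rate_is_healthy(baseline_counts, current_count, tolerance=0.5):
    """Return True if current_count is within `tolerance` of the baseline median.

    baseline_counts: events seen per interval in recent history (hypothetical input).
    tolerance: allowed relative deviation; 0.5 means plus or minus 50% (an assumed default).
    """
    if not baseline_counts:
        raise ValueError("baseline_counts must not be empty")
    expected = median(baseline_counts)
    if expected == 0:
        # A flow that is normally silent is healthy only while it stays silent.
        return current_count == 0
    deviation = abs(current_count - expected) / expected
    return deviation <= tolerance


def missing_fields(event, required_fields):
    """Return the required fields absent from an event, as a sorted list."""
    return sorted(f for f in required_fields if f not in event)
```

A sudden drop in rate or a non-empty `missing_fields` result would be the trigger to open the runbook for that flow.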

The pipeline is also responsible for enrichment: joining external data to flows, for example adding the geo or reputation of an IP address. Sometimes this is a SQL-like join; other times it is a direct query to a REST API or, better yet, GraphQL. Again, timeliness and cost are factors.
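To make the enrichment step concrete, here is a small sketch of a lookup-style join in Python. The `ip_context` table, the field names, and the sample values are hypothetical; in practice the context might come from a batch join or an API call rather than an in-memory dictionary.

```python
def enrich_with_ip_context(event, ip_context, ip_field="src_ip"):
    """Return a copy of `event` with geo and reputation added for its IP.

    ip_context: mapping of IP address -> {"geo": ..., "reputation": ...}
    (a stand-in for whatever external source is actually joined).
    Unknown IPs get None for both fields rather than raising an error.
    """
    enriched = dict(event)  # do not mutate the original event
    context = ip_context.get(event.get(ip_field), {})
    enriched["geo"] = context.get("geo")
    enriched["reputation"] = context.get("reputation")
    return enriched


# Example with documentation-range IP addresses and made-up context values.
ip_table = {"203.0.113.7": {"geo": "NL", "reputation": "suspicious"}}
login = {"src_ip": "203.0.113.7", "action": "login"}
enriched_login = enrich_with_ip_context(login, ip_table)
```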

Rules Engine (Brain)

With data flowing, we can write rules. Documenting rules is key to our approach. Documentation forces a level of rigor in how a rule is implemented: minimize false positives, identify blind spots, ensure data health, and, perhaps most importantly, define response actions. An alert without a response plan is worse than no alert at all.
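One way to enforce that rigor is to treat rule documentation as structured data that can be checked before a rule ships. The sketch below is only an illustration: the `RuleDoc` class and its field names are assumptions loosely modeled on the documentation points listed above, not an established schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RuleDoc:
    """Hypothetical documentation record for a single detection rule."""
    name: str
    goal: str
    data_sources: List[str] = field(default_factory=list)
    false_positives: List[str] = field(default_factory=list)
    blind_spots: List[str] = field(default_factory=list)
    response_actions: List[str] = field(default_factory=list)

    def problems(self) -> List[str]:
        """Return a list of documentation gaps; an empty list means complete."""
        issues = []
        if not self.goal.strip():
            issues.append("missing goal")
        if not self.data_sources:
            issues.append("no data sources listed")
        if not self.false_positives:
            issues.append("known false positives not documented")
        if not self.blind_spots:
            issues.append("blind spots not documented")
        if not self.response_actions:
            issues.append("no response actions defined")
        return issues
```

A deployment step could refuse any rule whose `problems()` list is non-empty, which makes "no alert without a response plan" a mechanical check rather than a convention.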

We want to enable the development of new rules as part of our standard security processes, like our post incident reviews. We also want to advocate for detections as primary security controls, alongside preventative controls, in work by other security teams. The detection team will not have a monopoly on rule ideas, and in an ideal state even non-security personnel will be writing rules on our platform, but we will have ultimate responsibility for the quality of alerting.

There may be multiple rules engines for different types of rules: streaming vs batch, SQL-like vs distributed code (Spark, Flink, StreamAlert), heuristics vs models, etc.

When rules trigger they create new streams of event data and may require additional enrichment and rules. Every triggered rule should fire automation before it fires an alert to a human. When a human gets an alert it should be the right person, with the right context and the right set of options. In our culture the person with the best understanding of the system is the system owner / oncall. This is what I mean by SOCless. These folks are experts in their domains, but likely not experts in security, so we need to provide them with context around what the alert means, and enrichment on the overall state of the system beyond the rule that triggered it, so that they can make a decision on what to do. This blends into response orchestration.
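The flow described above (automation first, then an alert to the system owner with context) could be sketched roughly as follows. Everything here is hypothetical: the `handle_trigger` function, the shape of the automation results, and the fallback to a `sirt` recipient when no owner is known are assumptions made for the example.

```python
def handle_trigger(trigger, automations, owners, default_owner="sirt"):
    """Run automation on a triggered rule, then route to a human only if needed.

    trigger: dict describing the fired rule, including a "system" key.
    automations: callables taking the current context and returning a dict
        with optional "context" (extra enrichment) and "resolved" (bool) keys.
    owners: mapping of system name -> on-call recipient for that system.
    default_owner: assumed fallback recipient when the system has no known owner.
    """
    context = dict(trigger)
    for automate in automations:
        result = automate(context)
        context.update(result.get("context", {}))
        if result.get("resolved"):
            # Automation handled it; no human is paged.
            return {"status": "auto_resolved", "context": context}
    recipient = owners.get(context.get("system"), default_owner)
    return {"status": "alerted", "recipient": recipient, "context": context}
```

The point of the design is that the human who receives the alert sees the accumulated context from every automation step, not just the raw rule output.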

Response Orchestration (Rubber-Road)

When an enriched alert reaches a human, that alert should contain a reasonable set of response actions. ‘Ignore’ would feed back into alert tuning. ‘Redeploy cluster’ might be another, or ‘collect additional data,’ or, if it cannot be resolved by the oncall, ‘escalate to SIRT.’ For this to work we need really good alerts: well documented, enriched, and with a high signal-to-noise ratio (SNR), each with a set of reasonable actions.
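As a sketch of how ‘Ignore’ could feed back into tuning, the snippet below records which action was chosen for each alert and flags rules that are mostly ignored. The `OutcomeLog` class, the action identifiers, and the 50% threshold are illustrative assumptions, not a description of a real orchestration platform.

```python
from collections import Counter

# Action identifiers mirroring the examples in the text (names are assumed).
ACTIONS = ("ignore", "redeploy_cluster", "collect_additional_data", "escalate_to_sirt")


class OutcomeLog:
    """Hypothetical record of the response action chosen for each alert."""

    def __init__(self):
        self._counts = {}

    def record(self, rule_name, action):
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action}")
        self._counts.setdefault(rule_name, Counter())[action] += 1

    def ignore_ratio(self, rule_name):
        """Fraction of this rule's alerts that were ignored (0.0 if none seen)."""
        counts = self._counts.get(rule_name)
        if not counts:
            return 0.0
        return counts["ignore"] / sum(counts.values())

    def rules_to_tune(self, threshold=0.5):
        """Rules whose alerts were ignored more often than `threshold`."""
        return sorted(r for r in self._counts if self.ignore_ratio(r) > threshold)
```

A rule that shows up in `rules_to_tune()` is a candidate for tightening or retirement, since most of its alerts produced no action.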

Each alert that fires is a chance to measure our forecasts about risk, so we need to capture feedback on outcomes through our orchestration platform. We also want to make sure our alerts are healthy through offensive unit testing (also referred to as atomic red team tests). We will need to stay highly aligned with the growing red team efforts to best leverage them for testing our overall risk impact and efficacy.
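An offensive unit test can be as simple as replaying a synthetic attack event through a rule and confirming it fires, while benign events stay quiet. The rule below, the event fields, and the helper names are all made up for illustration; a real test would replay telemetry generated by an actual red team or atomic test.

```python
def rule_fires(rule, events):
    """Return True if the rule matches at least one event."""
    return any(rule(event) for event in events)


def check_rule(rule, malicious_events, benign_events):
    """Report whether a rule detects the simulated attack and ignores benign noise."""
    return {
        "detects_attack": rule_fires(rule, malicious_events),
        "quiet_on_benign": not rule_fires(rule, benign_events),
    }


# Hypothetical rule: a web server process spawning a shell.
def shell_spawned_by_web_server(event):
    return event.get("parent") == "nginx" and event.get("process") in {"sh", "bash"}


# Synthetic events standing in for the output of an atomic test.
attack = [{"parent": "nginx", "process": "bash"}]
benign = [{"parent": "systemd", "process": "bash"}, {"parent": "nginx", "process": "nginx"}]
report = check_rule(shell_spawned_by_web_server, attack, benign)
```

Running checks like this on a schedule would catch a rule that silently stops working because the upstream data changed.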

Where do we go from here?

I am happy to announce the latest job opening on my team - Detection Lead. I anticipate this role will be a good bit of product leadership and architecture work with possibly some coding to glue things together. Strong program management skills to leverage existing investments, make buy/build decisions and operate across teams will be key. People management skills are not a requirement, although together we will spec out personnel needs. I am extremely excited about this subject and can’t wait to find someone to work with on it. If you want to chat about the approach, or the role, or have a referral please reach out: amaestretti@netflix.com