What makes detecting and responding to malicious activity on information systems so difficult? Incident Response and investigation can be incredibly intellectually rewarding. You get to puzzle together what transpired and take action to defend your systems, while working against a wily and adaptive adversary. On a bad day, however, you will find that most of the puzzle pieces are missing and there is no wily adversary present, only a deluge of false positive security events being happily emitted by an expensive PRNG called a SIEM. The core issues are volume of activity on, coupled with the complexity and change of, the systems you are defending. Traditional IT management compounds these issues rendering effective response impossible. DevOps, microservices and cloud infrastructure, in solving for scalability and development velocity, also offer solutions to these core security problems, and have the potential to Make IR Great Again.

IR allows you to exercise a wide range of security skills. The diversity of events that one encounters ensures a varied and engaging workflow that continues to challenge. It takes not only a keen intellect and technical skill, but a body of experience from which to draw. Often there is no runbook, so you cobble together inferences from similar past cases and drive on. I like this diversity. I also like the idea that there is an intelligent adaptive adversary on the other end of the network. It is like a John LeCarre novel in which my blackhat counterpart is forever scheming to gain advantage; a battle of wits.

Sadly this ideal is seldom a reality in traditional organizations. What should be diverse events become rote response to false positives. The broad range of skills and experiences you have developed go unused, and begin to atrophy. There is no adaptive adversary on the other end of a false positive; only a poorly tuned security appliance. The battle of wits is with your monitoring infrastructure as you try to reconstruct events with missing logs, no network coverage, tainted disks and corrupted memory captures. Eventually alert fatigue sets in and IR turns to hunting or red teaming to try find real leads.

Why? Well, the adversary gets to hide in systems that are busy, complex and changing. We have all met that business critical server that is too fragile to be: imaged, inspected, patched, bounced, or even looked at funny. It has six years of uptime, a fact that its owners are very proud of, and processes some critical portion of the business. A minute of downtime costs more than your annual salary. The owners are constantly interacting with the box cleaning out cache files and tweaking settings to keep their fragile pet alive. If it ever had a baseline that was seven years ago when it was built. The operational team is too busy propping this thing up to even think of documenting what they have done. Change management is overhead they simply cannot (will not) afford.

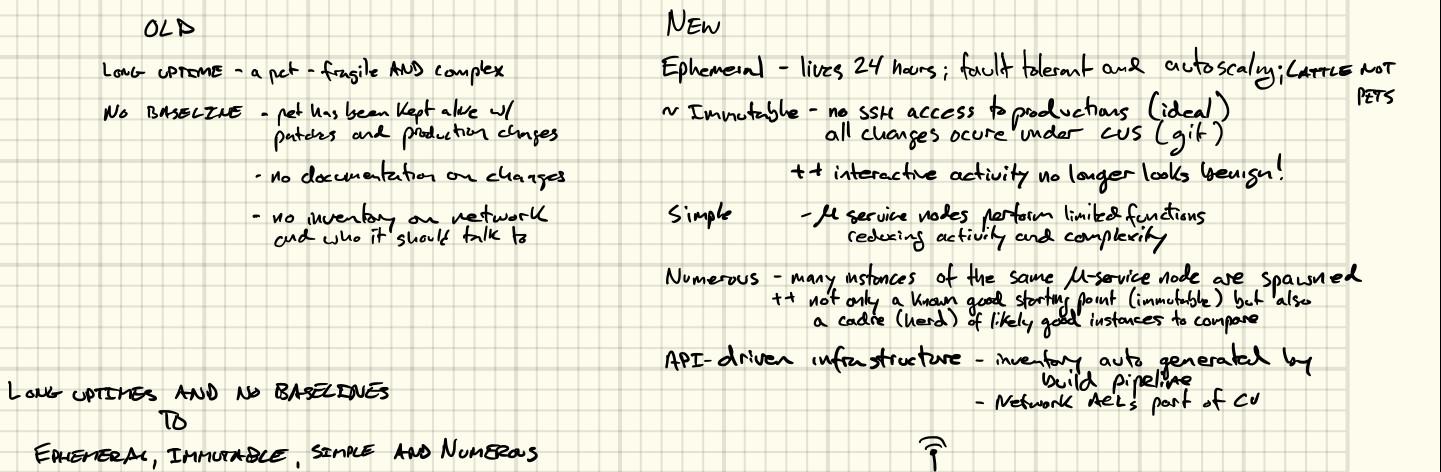

It is nearly impossible to conduct IR with these sacred cows. It is also a huge risk to the enterprise in terms of disaster recovery (critical AND fragile) and a bottleneck for performance inhibiting scalability. Enter DevOps, microservices and cloud infrastructure. Even if a traditional CIO doesn’t think much about security, uptime is very much front of mind. Where security was a potential foe of uptime, your operations were denied, but all that is about to change. My favorite saying from this movement is, “cattle not pets.” You do not name your servers according to some theme (ancient cities!), they get UUIDs. You do not hand-build each server, you deploy them by the tens to tens of thousands. Scaling to thousands of servers means you can’t do this by hand, and you can’t reasonably SSH into each of them - they need to be immutable. Built from source managed in a Content Versioning System (CVS) like Git and deployed via API’s in your cloud infrastructure.

In this scenario you have a baseline in the CVS, a pool of peer devices with which to perform differential analysis, and a disposable (ephemeral) immutable target. Ideally there should not be any interactive administration of these servers. All patches and changes are pushed through the CVS, so if you haven’t removed SSHd it should very seldom be accepting connections in production. This vast reduction in activity and ready-made baselines are a boon to IR.

The ephemeral nature of the environment can be challenging if you are detecting things at traditional speed (days even hours). If it takes an email with an abuse complaint to kick off on incident it is likely that the instance involved has been terminated, along with any logging and artifacts you don’t ship to central storage as a matter of course. The IP address has likely been re-distributed (perhaps to another organization), so while you have much better inventory from the cloud APIs that enable your infrastructure than the traditional model, you probably need to store some history (CloudTrail sadly won’t cut it, but you can get instances to log their IP and application to CloudWatch or other tricks).

In general gathering information needed for a response remains a challenge. There tends to be more raw data generated on cloud infrastructure than in traditional models, but it can be incomplete or spread across various disparate systems. You also have the server layer managed by you, the tenant, that may or may not have strong logging enabled. This is where we are investing in tooling, to automate the process of pulling data from these various sources and present an enriched view of the systems involved in an alert. Reducing false positives has been invested in extensively, but speeding alert resolution is just as, or more, important. This includes automating tooling to contain, eradicate and recover from incidents.

If we can simplify the function of individual components (servers running micro services), limit interactive management (immutable) and leverage cloud infrastructure (ephemeral and api-driven) we can lower the overall volume of activity, decrease the number of alerts and increase their accuracy (through differential analysis of known and likely good baselines), and increase the speed of alert resolution. This allows more time for highly complex investigations that are the most engaging and time for preparation and tool building outside the vicious cycle of reactive fire fighting.