Short of full PCAP it would be nice to capture metadata on network connections to and from an instance. In AWS EC2 it is difficult to perform network monitoring using traditional appliances as inter-instance traffic is not exposed to customers. You can get VPC flow logs which are useful; however, it is still desirable to have both a network and a host perspective on this activity. Both are valuable. VPC logs are difficult to corrupt from the host, and the host has more context on the executables that initiated the communication. So our goal is to collect metadata on network connections, including the remote address and the local process, then correlate that with VPC logs.

Let’s see if we can pull this off with auditd. By monitoring at the system call level this technique should be difficult to subvert. Other methods might include alternate system call monitoring technologies, or various network utilities (tcpdump, iptraf, etc). Using nftables (iptables) logging could work… but onward with auditd.

Curling an Address

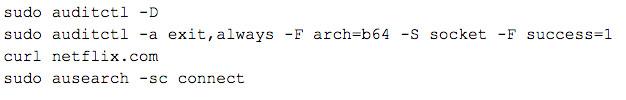

If you want to try this, set up auditd (apt-get install auditd on Ubuntu) to monitor successful socket() system calls using auditctl (reference this tutorial from RedHat).

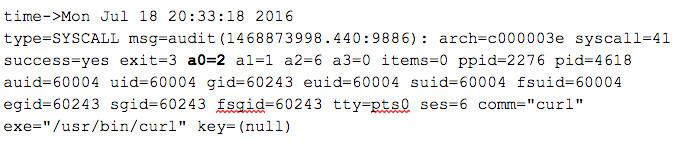

The first step for process communication is to allocate a socket via socket(int domain, int type, int protocol), which results in an entry like this:

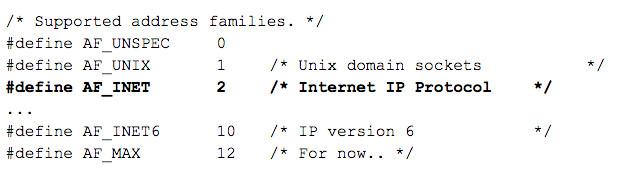

When logged you may notice there are lots of irrelevant socket-related calls. Sockets are used for both internet and interprocess communication, so it gets rather verbose. We would like to capture only connections related to internet traffic, or as defined in socket.h: AF_INET and AF_INET6 sockets. By filtering on the domain (the address family, later seen as sa_family) provided in the first argument (a0), we can record every time a program intends to send or receive IP traffic, but we don’t know the remote address or whether any traffic was actually sent.

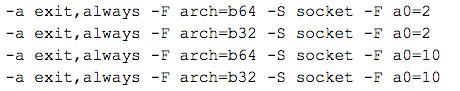
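To make the a0 filter concrete, here is a minimal sketch of reading the domain back out of a logged socket() record. The sample record is hypothetical and shortened for illustration (it is not taken from a real log), and the helper name `socket_domain` is made up for this example. One detail worth knowing: auditd prints a0 through a3 in hexadecimal, so AF_INET6 (10) shows up as `a0=a`.

```python
import re

# Address-family numbers from socket.h on Linux.
INTERNET_DOMAINS = {2: "AF_INET", 10: "AF_INET6"}

def socket_domain(record: str):
    """Return the internet domain name for a socket() audit record, or None."""
    fields = dict(re.findall(r'(\w+)=("[^"]*"|\S+)', record))
    if "a0" not in fields:
        return None
    # auditd prints a0..a3 in hexadecimal, so AF_INET6 (10) appears as "a".
    return INTERNET_DOMAINS.get(int(fields["a0"], 16))

# Hypothetical record, shortened for illustration (not from a real log).
sample = ('type=SYSCALL msg=audit(1500000000.000:100): arch=c000003e syscall=41 '
          'success=yes exit=3 a0=2 a1=1 a2=6 a3=0 comm="curl" exe="/usr/bin/curl"')
print(socket_domain(sample))  # AF_INET
```

Anything that is not AF_INET or AF_INET6 (a unix socket, for example) comes back as None, which is the noise we want to drop.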

Thus these rules will catch IPv4 and v6 socket allocation for both architectures:

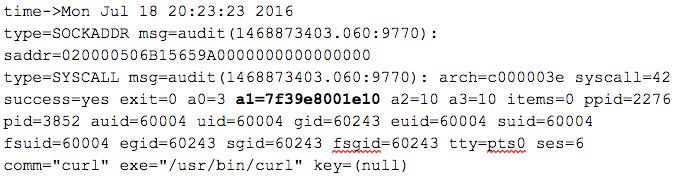
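In text form, rules of this shape can be spelled out as below. This is only a sketch of what such rules might look like, not a copy of the rules in the image: the key name `net_socket` is an arbitrary label chosen here, and it assumes your auditctl accepts the `socket` syscall name for both the b32 and b64 architectures.

```python
# Address-family numbers from socket.h on Linux.
DOMAINS = {"AF_INET": 2, "AF_INET6": 10}
ARCHES = ("b32", "b64")

def socket_rules(key: str = "net_socket"):
    """Build one auditctl rule line per (architecture, domain) pair."""
    return [
        # success=1 keeps only socket() calls that succeeded.
        f"-a always,exit -F arch={arch} -S socket -F a0={num} -F success=1 -k {key}"
        for arch in ARCHES
        for num in DOMAINS.values()
    ]

for rule in socket_rules():
    print(rule)
```

Each printed line is meant to be used as the arguments to auditctl, or placed in a rules file; check it against your distribution's audit version before relying on it.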

This is a good starting place to detect new/unwanted processes with network intentions, but it would be great to get actual IP addresses to correlate with VPC logs, so we need to look later in the socket lifecycle. For outgoing traffic the relevant system call is connect(int sockfd, const struct sockaddr *addr, socklen_t addrlen). Add a new audit rule (left to the reader) and it generates this entry:

Ideally we could filter for just internet sockets. Unfortunately, when connect() is called the domain (sa_family) lives inside the sockaddr structure, and an audit rule can only compare the argument values themselves; a1 is a pointer, so a rule cannot look at what it points to. We do get sockfd (a0), but that is only the file descriptor number returned by the earlier socket() call (3 in this case), and it says nothing about the socket’s domain or type. The remote address is in the same struct. Fortunately on exit the kernel provides saddr, which appears to be the actual sockaddr data in hex: saddr=020000506B15659A0000000000000000

Sure enough there is a decoder which yields: family=2 107.21.101.154:80. Note that the first two characters form the hex byte that defines our sa_family, so we want to filter on that for either 02 or 0A (AF_INET = 2 and AF_INET6 = 10)… sadly saddr is not an available field for auditd to filter on. So if we want the IP address we have to log all the connect() events and then filter at a higher level. This can be done with rsyslog, or perhaps with a new userland audit daemon like go-audit.
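The decoding itself is simple enough to do by hand. Below is a minimal sketch, assuming a little-endian host (as on x86) and the standard Linux sockaddr_in and sockaddr_in6 layouts; `decode_saddr` is a name made up for this example, not the decoder referred to above.

```python
import ipaddress

def decode_saddr(hex_saddr: str):
    """Decode an audit saddr hex string into (family, address, port).

    Returns None for families other than AF_INET (2) and AF_INET6 (10).
    """
    raw = bytes.fromhex(hex_saddr)
    # sa_family is stored in host byte order; little-endian is assumed here.
    family = int.from_bytes(raw[0:2], "little")
    # The port is always in network (big-endian) byte order.
    port = int.from_bytes(raw[2:4], "big")
    if family == 2:   # sockaddr_in: family, port, 4-byte address, padding
        return family, str(ipaddress.IPv4Address(raw[4:8])), port
    if family == 10:  # sockaddr_in6: family, port, flowinfo, 16-byte address
        return family, str(ipaddress.IPv6Address(raw[8:24])), port
    return None

print(decode_saddr("020000506B15659A0000000000000000"))
# (2, '107.21.101.154', 80)
```

Reading the example byte by byte: 0200 is the family, 0050 is port 80, and 6B 15 65 9A is 107.21.101.154.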

On the receive side, to establish a listening port a process creates a socket, binds it to a local address, sets it to listen and then accepts connections. The accept() system call best meets our goal as the remote address is available (on exit) in the same struct as with connect(). bind() is also useful as it provides the listening port (and local IP), which is a relevant event for backdoors even if no one ever connects. We have the same challenges around filtering on sa_family with these receive calls.
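If the sequence is unfamiliar, this small sketch walks one TCP connection through it on the loopback interface. It is purely illustrative: the function name is made up, and port 0 just asks the kernel for any free port. Running it on a host with the audit rules loaded should produce one event per step you are monitoring.

```python
import socket

def listen_and_accept():
    """Walk one TCP connection through socket, bind, listen, connect, accept."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))      # bind(): local IP and port (0 = any free port)
        server.listen(1)                   # listen(): start queuing connections
        local = server.getsockname()
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
            client.connect(local)          # connect(): the outgoing side
            conn, peer = server.accept()   # accept(): remote address is known on return
            with conn:
                return local, peer, client.getsockname()

local, peer, client_addr = listen_and_accept()
print("listening on", local, "accepted from", peer)
```

Note that the address returned by accept() matches the client's own socket address, which is exactly the value we want in the log.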

To get started with auditing network events I recommend you stick with socket() calls filtered for AF_INET and AF_INET6. If you have a robust ETL pipeline that can perform secondary selection you can easily add connect(), accept() and even bind() calls to your logging. Most of these will be legitimate, but for servers with a constrained set of functions nefarious activity should stand out.
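As a rough idea of what that secondary selection could look like, here is a minimal sketch that keeps only log lines whose saddr belongs to an internet socket. It assumes a little-endian host, so the family shows up as the leading 0200 or 0A00; the sample lines are hypothetical apart from the saddr value decoded earlier, and `internet_records` is a name made up for this example.

```python
import re

SADDR = re.compile(r"\bsaddr=([0-9A-Fa-f]+)")
# Leading sa_family bytes on a little-endian host: AF_INET (2), AF_INET6 (10).
INTERNET_PREFIXES = ("0200", "0A00")

def internet_records(lines):
    """Yield only audit lines whose saddr field is an IPv4 or IPv6 address."""
    for line in lines:
        match = SADDR.search(line)
        if match and match.group(1).upper().startswith(INTERNET_PREFIXES):
            yield line

# Hypothetical log lines for illustration; the second is a unix socket path.
sample_lines = [
    "type=SOCKADDR msg=audit(1500000000.000:101): saddr=020000506B15659A0000000000000000",
    "type=SOCKADDR msg=audit(1500000000.000:102): saddr=01002F746D702F78",
    "type=SYSCALL msg=audit(1500000000.000:101): arch=c000003e syscall=42 a0=3",
]
for line in internet_records(sample_lines):
    print(line)
```

Only the first line survives. In a real pipeline you would also want to carry along the matching SYSCALL record (same event id in the msg=audit(...) field) so that the process details stay attached to the address.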